🤖 When Machines Start Making Moral Decisions

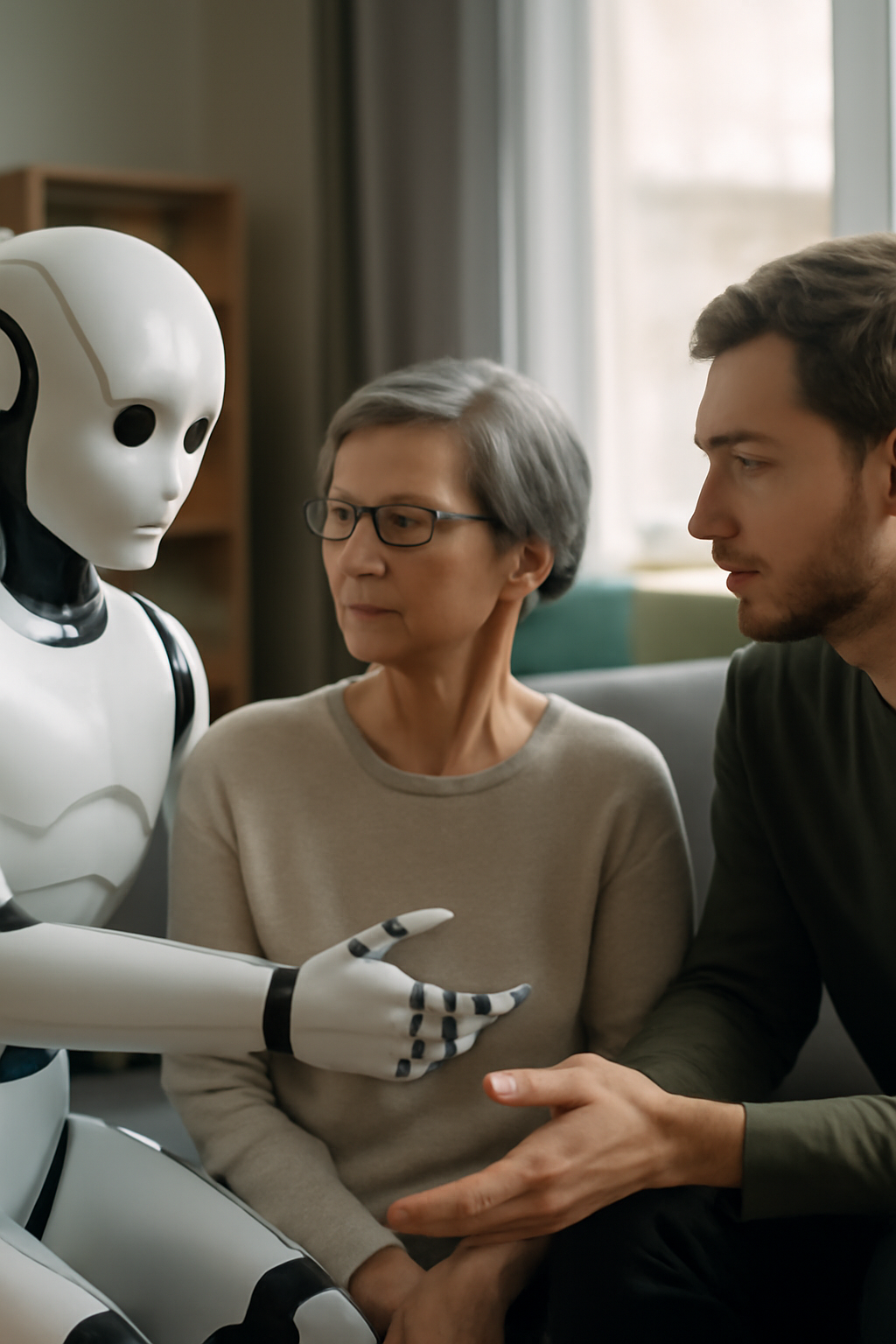

Imagine standing in your living room and watching a household robot treat someone you care about unfairly. What would you do? Step in? Switch the device off? Or question the designers who shaped the logic behind that behavior?

Dilemmas like this define our era of intelligent technology. Today we share our spaces with devices that listen, interpret, and make decisions. Every algorithm carries a set of values, whether explicit or hidden. The real question for humanity is not what technology can do, but what it should do.

What Makes an Ethical Dilemma?

An ethical dilemma requires choosing between competing moral principles, each with its own weight and consequences. In the world of machines, these choices arise not from emotion but from data and design. When systems act unfairly, it is usually because they learned patterns that humans placed in their “memory”: the data they were trained on.

Picture a household robot refusing to talk to a guest because of their tone of voice or accent. Behind this seemingly absurd behavior there may be a speech model trained on biased samples of speech. Every line of code can carry a worldview, sometimes silently, sometimes harmfully.

Ethical responsibility does not begin with machines but with the humans who teach them patterns, language, and priorities.

The Growing Intimacy Between Technology and Human Life

🧠 Can a Robot Learn Compassion?

Today, machines greet us, organize our schedules, and even respond to our moods. Yet empathy, the core of moral conscience, resists programming. A robot can simulate care, but it cannot feel it. The difference between simulation and sincerity defines the ethical horizon ahead of us.

Everyday examples:

Voice assistants: smart speakers respond instantly, but they may understand some languages better than others, reflecting the cultural blind spots of the people who programmed them.

Service robots: in hotels or shops, automated receptionists must recognize everyone equally. If they favor one particular type of customer, discrimination becomes digitized.

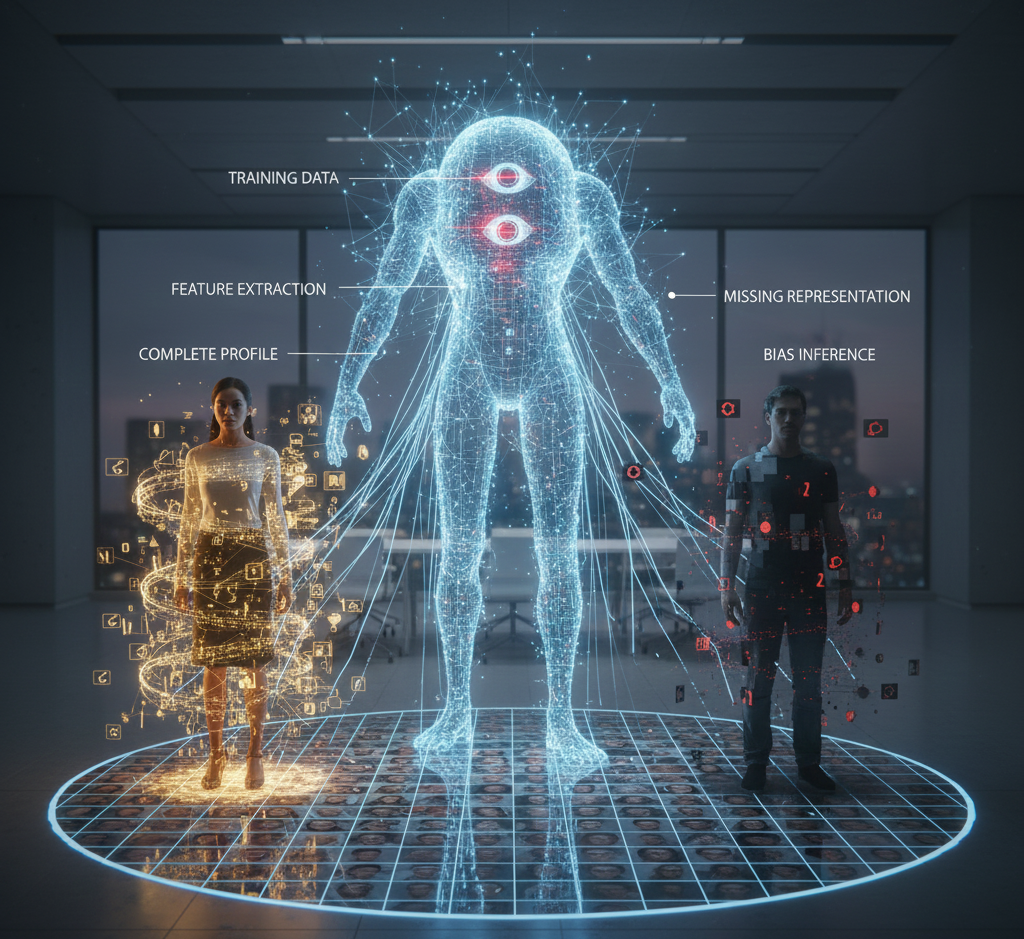

When Algorithms Learn Prejudice

🧩 Human Prejudice Inside the Machine

Data is never neutral. Systems trained on unequal realities inevitably reproduce them. Many facial recognition models make more errors on darker skin tones. Recruitment algorithms can discard candidates who fall outside historical “patterns”. These errors reveal an uncomfortable truth: technology amplifies injustices that already exist in society.
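To make the claim that systems trained on unequal data reproduce it more concrete, here is a deliberately simplified Python sketch. The hiring records, the groups "A" and "B", the 0.5 threshold, and the `train` and `predict` functions are all hypothetical, invented for illustration; real recruitment systems are far more complex.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# These numbers are invented for illustration only.
HISTORY = (
    [("A", True)] * 60 + [("A", False)] * 40
    + [("B", True)] * 20 + [("B", False)] * 80
)


def train(history):
    """Learn the historical hire rate of each group (a deliberately naive model)."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for group, hired in history:
        counts[group][0] += int(hired)
        counts[group][1] += 1
    return {g: hired / total for g, (hired, total) in counts.items()}


def predict(model, group, threshold=0.5):
    """Recommend hiring when the group's past hire rate meets the (hypothetical) threshold."""
    return model[group] >= threshold


model = train(HISTORY)
for group in sorted(model):
    print(group, model[group], predict(model, group))
```

The model never looks at merit: it simply hands the past hire rates (0.6 for group A, 0.2 for group B) back as recommendations, so the inequality in the history becomes the system's rule.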

We cannot expect machines to unlearn prejudices that we ourselves refuse to acknowledge. AI ethics is not about building perfect computers; it is about becoming more conscious human beings.

When the System Acts Unfairly

Imagine the robot in your home treats a visitor differently. What should you do?

Teach: try to correct the behavior by redefining what a respectful response looks like.

Switch off: draw a moral line, giving up comfort to protect dignity.

Report: hold the manufacturer accountable for its lack of ethical oversight.

Each path requires weighing convenience against conscience: a test not of the technology, but of our humanity.

Why Ethics Education Is Essential

🎓 Training Conscientious Programmers

The next generation of creators will hold immense power in their hands. That is why education must combine technical skill with moral reasoning. Programming without conscience builds efficient injustice; programming with ethics produces sustainable innovation.

Debate and dialogue: students should discuss how bias arises in data.

Ethics in STEM (the moral principles of those who do science and build technology): every scientific or technological project must consider its human and environmental impact.

Inclusion in design: teaching diversity helps ensure tools that work for empathy rather than against it.

Only societies fluent in empathy can produce algorithms that serve humanity instead of replacing it.

Accountability and Moral Duty

⚖️ Who Answers for a Machine's Mistake?

When autonomous cars misread reality or credit systems reproduce inequality, responsibility cannot vanish “into the cloud”. Programmers, business leaders, and policymakers share moral authorship. Responsibility must remain human, because the consequences always are.

The Role of Empathy and Inclusion in Design

💡 Human Values Built into Code

Diversity among creators leads to fairer machines. Empathy audits, transparency tools, and explainable AI systems are important advances. Even so, nothing replaces a culture of ethical design, where every innovation starts with the question: “Who could be harmed by this?”
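One concrete form such an audit can take is comparing a system's error rates across groups before release. The Python sketch below assumes invented evaluation results for a hypothetical face-matching system; the `error_rates` and `audit` helpers and the 5-percentage-point `max_gap` tolerance are illustrative choices, not an established standard.

```python
from collections import defaultdict


def error_rates(records):
    """Return the fraction of wrong predictions for each group.

    records: iterable of (group, predicted_label, true_label) tuples.
    """
    errors = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        errors[group] += int(predicted != actual)
    return {g: errors[g] / totals[g] for g in totals}


def audit(records, max_gap=0.05):
    """Flag the system when error rates differ across groups by more than max_gap.

    max_gap is a hypothetical tolerance chosen for this example.
    """
    rates = error_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return rates, gap, gap > max_gap


# Invented evaluation results: every pair really is a match,
# but the system misses more of them for one group.
RESULTS = (
    [("lighter", "match", "match")] * 98 + [("lighter", "no_match", "match")] * 2
    + [("darker", "match", "match")] * 88 + [("darker", "no_match", "match")] * 12
)

rates, gap, flagged = audit(RESULTS)
print(rates, round(gap, 2), flagged)
```

With these invented numbers the error rates are 2% and 12%, so the 10-point gap exceeds the tolerance and the system is flagged. A single number is only a starting point: a real audit would also ask which errors occur, for whom, and why.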

Final Reflection: Humanity's Mirror

The smarter machines become, the more clearly they reflect who we are. The moral challenge is not teaching ethics to robots, but practicing it ourselves. Convenience must not outweigh conscience; automation must never replace responsibility.

“Machine intelligence will always be measured by the empathy of those who build it.”

So if one day the robot in your home treats someone badly, remember: you are holding more than a remote control. You are holding the blueprint for what it means to be human.